
Currently, I am a Jindouyun (筋斗云) research intern at ByteDance, focusing on AIGC and MLLM.

Previously, I interned at Tencent from 2022 to 2024 and at StepFun from 2024 to 2025, where I was advised by Dr. Gang Yu. I expect to graduate in 2026 and am open to postdoc and research scientist opportunities.

Email / CV / Google Scholar / GitHub / WeChat


My research interests are broadly in 3D/2D Computer Vision and Generative AI. My overarching research objective is to advance AI-driven methods that augment and amplify human creativity. Specifically, I aim to develop intelligent 3D/2D vision and generative models that enable humans to more intuitively create, edit, and control lifelike virtual avatars, scenes, and animations.


FlowAct-R1: Towards Interactive Humanoid Video Generation

FlowAct Team, ByteDance Intelligent Creation

Tech Report, 2026

project page / arxiv

We present FlowAct-R1, a novel framework that enables lifelike, responsive, and high-fidelity humanoid video generation for seamless real-time interaction.


Bridging Your Imagination with Audio-Video Generation via a Unified Director

Jiaxu Zhang, Tianshu Hu, Yuan Zhang, Zenan Li, Linjie Luo, Guosheng Lin*, Xin Chen*

arXiv, 2025

project page / arxiv

UniMAGE unifies script drafting, extension, continuation, and keyframe image generation, thereby enabling coherent long-form storytelling with consistent characters and cinematic visual compositions. The generated scripts and keyframes can further serve as structured, high-level guidance for existing audio-video joint generation models.


DreamDance: Animating Character Art via Inpainting Stable Gaussian Worlds

Jiaxu Zhang, Xianfang Zeng, Xin Chen, Wei Zuo, Gang Yu*, Guosheng Lin, Zhigang Tu*

arXiv, 2025

project page / code / arxiv

We propose DreamDance, a novel paradigm that reformulates character art animation into two inpainting-based steps: Camera-aware Scene Inpainting for stable scene reconstruction and Pose-aware Video Inpainting for dynamic character animation.


MikuDance: Animating Character Art with Mixed Motion Dynamics

Jiaxu Zhang, Xianfang Zeng, Xin Chen, Wei Zuo, Gang Yu*, Zhigang Tu*

Proceedings of the International Conference on Computer Vision (ICCV, Oral), 2025

project page / code / arxiv

We propose MikuDance, a diffusion-based pipeline incorporating mixed motion dynamics to animate stylized character art.


Freehand-Genshin-Diffusion

A project for transforming Genshin PVs into a freehand style using a diffusion model. I've been exploring 2D image animation recently, and this project is purely for fun. Feel free to reach out and discuss it with me.


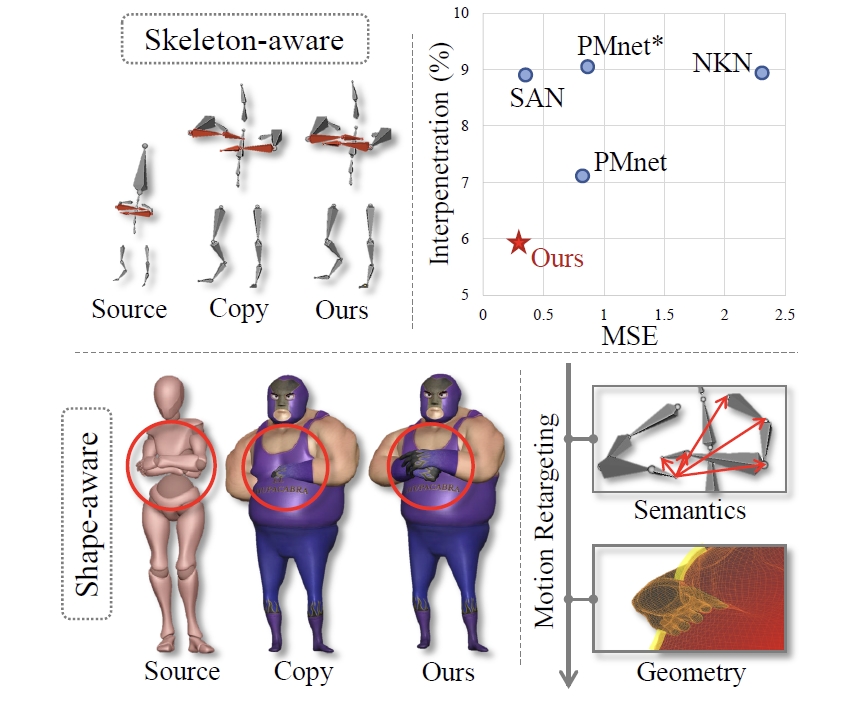

A Modular Neural Motion Retargeting System Decoupling Skeleton and Shape Perception

Jiaxu Zhang, Zhigang Tu*, Junwu Weng, Junsong Yuan, Bo Du

IEEE Transactions on Pattern Analysis and Machine Intelligence (T-PAMI), 2024

code / arxiv

M-R2ET is a modular neural motion retargeting system that transfers motion between characters with different structures whose skeletons correspond to homeomorphic graphs, while preserving motion semantics and perceiving shape geometry.


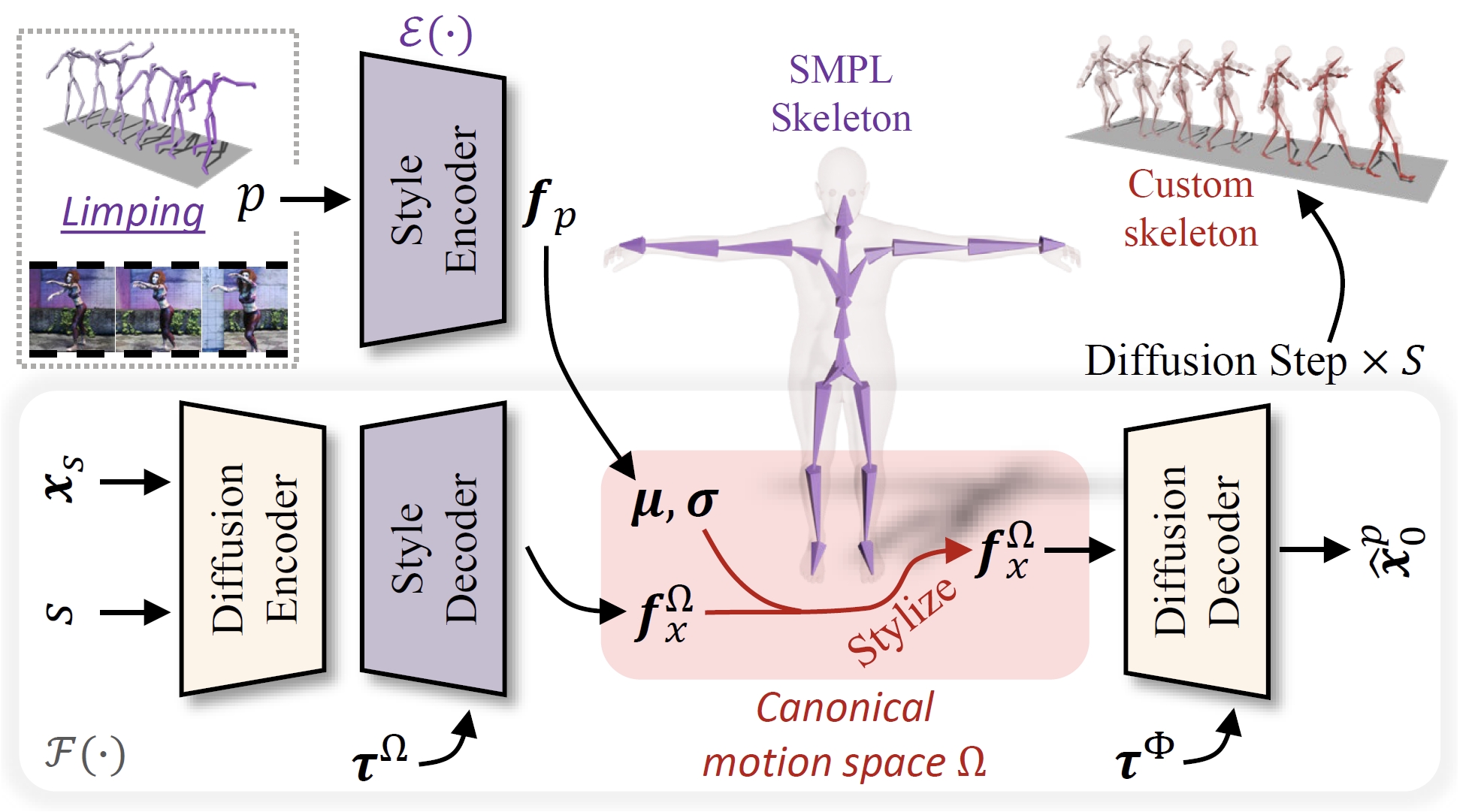

Generative Motion Stylization of Cross-structure Characters within Canonical Motion Space

Jiaxu Zhang, Xin Chen, Gang Yu, Zhigang Tu*

Proceedings of the 32nd ACM International Conference on Multimedia (ACM MM), 2024

arxiv

We present MotionS, a generative motion stylization pipeline for synthesizing diverse and stylized motion on cross-structure characters using cross-modality style prompts.


TapMo: Shape-aware Motion Generation of Skeleton-free Characters

Jiaxu Zhang#, Shaoli Huang#, Zhigang Tu*, Xin Chen, Xiaohang Zhan, Gang Yu, Ying Shan

The Twelfth International Conference on Learning Representations (ICLR), 2024

project page / code / arxiv

TapMo is a text-based animation pipeline for generating motion for a wide variety of skeleton-free characters.


Skinned Motion Retargeting with Residual Perception of Motion Semantics & Geometry

Jiaxu Zhang, Junwu Weng, Di Kang, Fang Zhao, Shaoli Huang, Xuefei Zhe, Linchao Bao, Ying Shan, Jue Wang, Zhigang Tu*

Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), 2023

project page / code / arxiv

R2ET is a neural motion retargeting model that can preserve the source motion semantics and avoid interpenetration in the target motion.


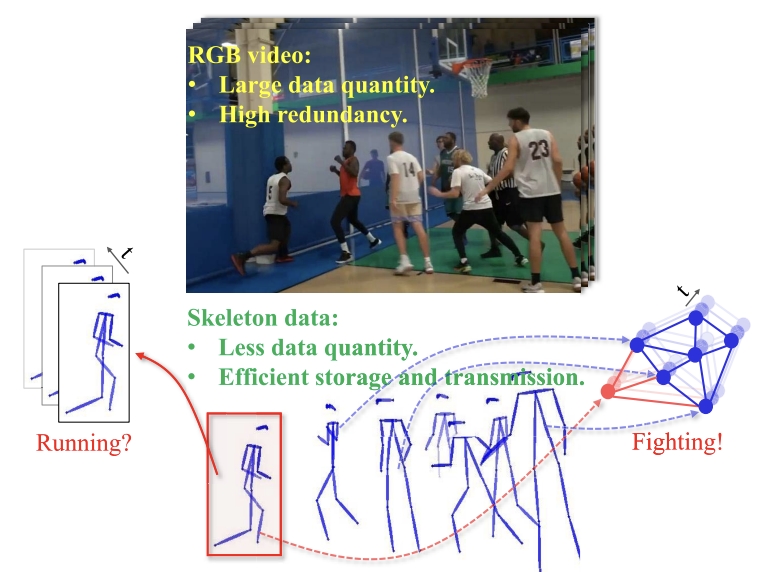

Zoom Transformer for Skeleton-based Group Activity Recognition

Jiaxu Zhang, Yifan Jia, Wei Xie, and Zhigang Tu*

IEEE Transactions on Circuits and Systems for Video Technology (T-CSVT), 2022

code / arxiv

We propose a novel Zoom Transformer to exploit both the low-level single-person motion information and the high-level multi-person interaction information in a uniform attention structure.


Joint-bone Fusion Graph Convolutional Network for Semi-supervised Skeleton Action Recognition

Zhigang Tu#, Jiaxu Zhang#*, Hongyan Li, Yujin Chen, and Junsong Yuan

IEEE Transactions on Multimedia (T-MM), 2022

code / arxiv

We propose a semi-supervised skeleton-based action recognition method.


2025.06 - Present, Shenzhen

Research Intern for AIGC and MLLM at ByteDance. Advisors: Dr. Xin Chen and Dr. Tianshu Hu


2024.05 - 2025.06, Shanghai

Research Intern for AIGC at StepFun. Advisors: Dr. Gang Yu and Dr. Xianfang Zeng


2023.06 - 2024.04, Shanghai

Research Intern at Tencent PCG. Advisors: Dr. Gang Yu and Dr. Xin Chen

2022.07 - 2023.06, Shenzhen

Research Intern at Tencent AI Lab. Advisors: Dr. Junwu Weng and Dr. Shaoli Huang


2025.02 - Present, Singapore

Joint Ph.D. Student. Research Advisor: Prof. Guosheng Lin


2020.09 - Present, Wuhan

Ph.D. Student at LIESMARS. I received my master's degree in Computer Technology in 2023. Research Advisor: Prof. Zhigang Tu


2016.09 - 2020.06, Nanjing

I received my B.S. degree in Geographic Information Science in 2020. GPA: 3.88/4.0, Rank: 1/26.

2018.11 - 2020.06, Nanjing

Research Assistant at the Research Center of Complex Transportation Network (TLab).


This homepage is based on Jon Barron's website and deployed on GitHub Pages. Last updated: Jan. 2026

© 2026 Jiaxu Zhang